AI Ethics and Governance: Building Responsible Frameworks

Key Takeaways

Ethical AI Framework: Develop comprehensive AI ethics policies that balance innovation with responsibility while meeting regulatory requirements and maintaining stakeholder trust.

Governance Structure: Implement robust governance frameworks with clear accountability, oversight committees, and risk management protocols to align AI with ethical principles and business objectives.

Transparency & Accountability: Establish mechanisms that clarify AI decision-making processes, assign responsibility for outcomes, and build stakeholder confidence.

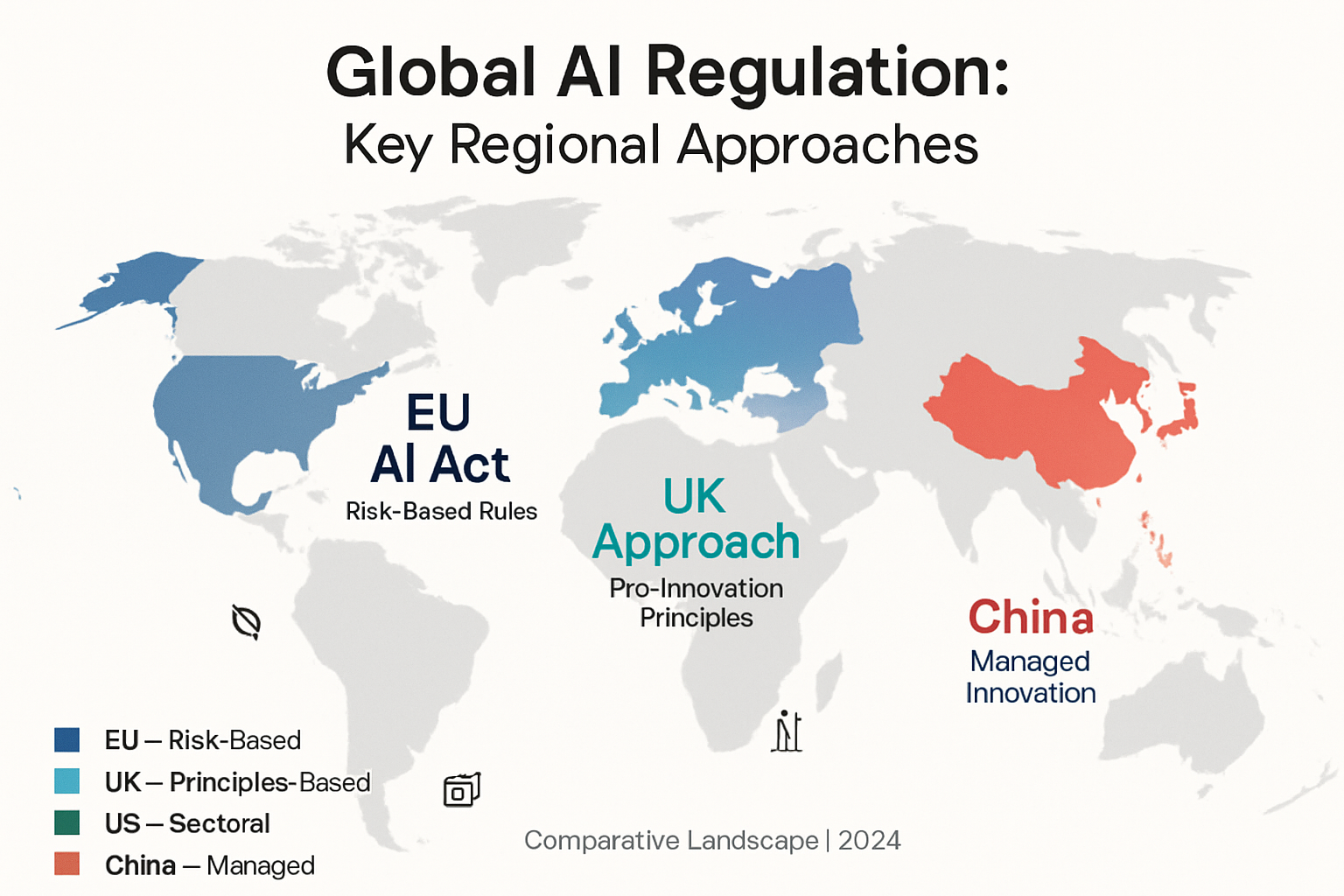

Regulatory Compliance: Navigate the evolving landscape of AI regulations like the EU AI Act and the UK's AI regulatory framework to mitigate legal and ethical risks.

Practical Implementation: Deploy scalable ethical practices through ethics-by-design approaches, comprehensive training, and automated compliance tools.

Risk Management: Adopt frameworks to identify, assess, and mitigate potential harms while maintaining adaptability in rapidly changing environments.

Introduction: Balancing AI Innovation with Ethical Responsibility

Artificial intelligence has moved from a theoretical concept to a transformative force reshaping industries across the globe. As organisations increasingly deploy AI to drive efficiency, improve decision-making, and revolutionise customer experiences, ensuring ethical implementation and regulatory compliance becomes paramount.

The intersection of powerful AI capabilities and ethical considerations creates a delicate balance that organisations must navigate carefully. This balance requires robust governance frameworks that align AI initiatives with ethical principles while accommodating diverse legal, cultural, and societal expectations across different markets and contexts.

Developing a comprehensive AI ethics policy extends far beyond mere regulatory compliance. It establishes a foundation for building trust with stakeholders, minimises risks such as algorithmic bias and privacy violations, and embeds crucial concepts such as transparency and accountability throughout the AI lifecycle. When executed effectively, responsible AI doesn't simply prevent harm—it actively fosters innovation that is fair, sustainable, and oriented toward positive societal impact.

This comprehensive guide explores the multifaceted aspects of AI governance and outlines practical steps organisations can take to embed ethical considerations into their AI operations. Drawing on real-world examples and industry best practices, it provides a strategic roadmap for bridging technological advancement with principled, responsible decision-making in the AI era.

Understanding AI Ethics Policy: Creating Standards for Responsible AI

What Is an AI Ethics Policy? Establishing Your Foundation

An AI ethics policy serves as a comprehensive framework that establishes clear guidelines for the responsible design, development, deployment, and use of artificial intelligence systems. These policies extend beyond technical specifications to address broader social, legal, and moral considerations, ensuring AI initiatives align with organisational values and maintain public trust.

Effective AI ethics policies typically encompass several crucial elements:

- Core ethical principles: Articulating fundamental values such as transparency, fairness, accountability, and privacy protection that guide all AI initiatives

- Regulatory alignment: Ensuring compliance with relevant legislation like the UK's AI regulatory framework, the EU AI Act, and the GDPR

- Operational guidance: Translating high-level principles into practical implementation strategies across the AI lifecycle

- Review mechanisms: Establishing processes for ongoing assessment and improvement of ethical practices

While conceptually straightforward, implementing these policies in real-world settings can be challenging. For instance, Google's pioneering 2018 AI Principles established ambitious goals, such as "Avoid creating or reinforcing unfair bias," but the company faced significant implementation hurdles due to the complexity of datasets and diverse cultural interpretations of fairness. This illustrates the critical need for policies that are not only aspirational but also actionable and adaptable to practical constraints.

Key Components of a Comprehensive AI Ethics Policy

1. Foundational Ethical AI Principles

The cornerstone of any effective AI ethics policy is a clear articulation of fundamental principles that guide all AI activities. Widely recognised principles include:

- Transparency: AI systems should operate in an explainable manner, with decisions that can be traced back to specific inputs and processing logic. This is particularly critical in high-stakes domains like healthcare and financial services.

- Fairness: Algorithms must be designed to avoid bias and actively mitigate discrimination, ensuring equitable outcomes across different demographic groups and contexts.

- Accountability: Policies must establish clear roles and responsibilities, defining who is accountable for AI decisions and outcomes at each stage of the development and deployment lifecycle.

- Privacy: Robust data protection measures must safeguard user information, ensure informed consent, and comply with relevant privacy regulations like the UK GDPR.

Participatory design approaches have proven essential in developing meaningful ethical principles. By incorporating diverse perspectives from technical teams, legal experts, ethicists, and potentially affected communities, organisations can create more robust and inclusive frameworks. Microsoft exemplifies this approach through its Responsible AI initiatives, which actively integrate stakeholder input while using tools such as InterpretML to enhance the interpretability of complex AI models.

2. Governance Structures for Ethical Oversight

Comprehensive governance structures ensure ethical principles translate into consistent practices across an organisation. Essential components include:

- Ethics Committees: Multidisciplinary teams responsible for reviewing AI initiatives, advising on compliance issues, and assessing ethical implications before deployment

- Auditing Systems: Independent mechanisms to evaluate AI systems against established ethical benchmarks and evolving regulatory standards

- Risk Management Protocols: Systematic approaches to identifying, assessing, and addressing ethical and operational risks proactively

HSBC provides an instructive example through its AI and Data Ethics Working Group, which conducts regular reviews to identify potential algorithmic risks and maintains ethical oversight of AI applications across the organisation. Their approach demonstrates how governance structures can adapt to the specific needs and risk profiles of different industries.

3. Policy Enforcement and Accountability Mechanisms

Without robust enforcement mechanisms, even the most well-crafted ethical principles remain ineffective. Organisations must embed accountability into their AI systems by:

- Assigning specific roles and responsibilities for ethical practices across different stages of AI development and operation

- Implementing comprehensive audit trails to ensure transparency in decision-making and facilitate compliance monitoring

- Establishing protected whistleblowing frameworks that allow employees to report instances of unethical AI use safely

BNP Paribas exemplifies effective accountability practices by mandating regular external audits of its AI tools, which help ensure compliance with global regulatory standards and enhance its credibility with stakeholders. This approach demonstrates how third-party verification can strengthen internal accountability frameworks.
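One way to make an audit trail tamper-evident is to chain entries together by hash, so that altering an earlier record invalidates everything after it. The sketch below is a minimal illustration of that idea, not a description of any organisation's actual system. The `AuditTrail` class, its field names, and the example actors are all hypothetical, and a production log would also need timestamps, access control, and durable storage.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    """Stable SHA-256 digest of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    """Append-only log in which each entry commits to the previous entry's hash."""
    entries: list = field(default_factory=list)

    def record(self, actor: str, action: str, details: dict) -> dict:
        # The first entry chains to a fixed all-zero hash.
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"actor": actor, "action": action, "details": details, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain is unbroken."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

Calling `record()` for each approval, override, or flagged concern and running `verify()` during audits gives reviewers a simple integrity check on the decision history.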

Building a Robust AI Governance Framework

Establishing Organisational Policies and Standards

Developing tailored organisational policies forms the foundation of effective AI governance. These policies should:

- Define acceptable applications of AI technologies, ensuring alignment with organisational values and societal welfare

- Detail specific procedures for evaluating algorithmic fairness and mitigating bias before implementation

- Establish clear compliance metrics that align with existing legal frameworks and industry best practices

Salesforce demonstrates the value of dedicated governance structures through its Office of Ethical and Humane Use of Technology, which embeds ethical considerations directly into the product development lifecycle. This approach ensures that ethical governance isn't merely an afterthought but an integral part of how AI solutions are conceived and developed.
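A procedure for evaluating algorithmic fairness usually starts from a measurable definition. As a hedged illustration, the sketch below computes two commonly used group-fairness measures from binary predictions: the demographic parity difference and the disparate impact ratio. The function names are illustrative, and which metric and threshold are appropriate is a policy decision that depends on the use case.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(groups, predictions):
    """Lowest selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(groups, predictions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Toy data: group "a" is selected three times in four, group "b" once in four.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
gap = demographic_parity_difference(groups, predictions)
ratio = disparate_impact_ratio(groups, predictions)
```

A policy could then state the acceptable range for `gap` or `ratio` as one of its compliance metrics, with any breach triggering review before implementation.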

Conducting Comprehensive Impact Assessments

Thorough impact assessments are essential for anticipating risks and ensuring equitable outcomes from AI systems. Key assessment techniques include:

- Dataset Audits: Systematically examining training data to identify and address potential biases or inconsistencies

- Ethical Scenario Planning: Analysing how AI systems might perform under different contexts and identifying potential unintended consequences

- Stakeholder Engagement: Actively involving potentially affected populations in the development and assessment process

The UK Government's Data Ethics Framework provides valuable guidance on conducting impact assessments for AI systems in public sector applications. Similarly, frameworks such as NIST's AI Risk Management Framework have become instrumental in standardising impact assessment practices across industries.
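A dataset audit can begin with something as simple as checking how well each group is represented and how labels are distributed within it. The following is a minimal sketch under stated assumptions: rows are dictionaries, the label is binary, and the `min_share` threshold is a placeholder rather than a recommended value. The function and key names are hypothetical.

```python
from collections import Counter


def audit_dataset(rows, group_key, label_key, min_share=0.10):
    """Summarise representation and positive-label rate for each group."""
    counts = Counter(row[group_key] for row in rows)
    positives = Counter(row[group_key] for row in rows if row[label_key] == 1)
    total = len(rows)
    report = {}
    for group, n in counts.items():
        share = n / total
        report[group] = {
            "count": n,
            "share": share,
            "positive_rate": positives[group] / n,
            # Flag groups whose share of the data falls below the chosen floor.
            "under_represented": share < min_share,
        }
    return report
```

Large gaps in `share` or `positive_rate` between groups do not prove bias on their own, but they indicate where a closer review of data collection and labelling is warranted.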

Implementing Monitoring and Continuous Improvement Protocols

AI governance frameworks must remain adaptable to keep pace with rapid technological advancements and evolving regulatory landscapes. Effective strategies include:

- Regularly updating policies based on stakeholder feedback and emerging best practices

- Publishing detailed transparency reports to foster accountability and build trust

- Utilising automated monitoring tools to track compliance across complex systems efficiently

Google's implementation of Model Cards to document AI system risks demonstrates an innovative approach to transparency and adaptability. These standardised documentation templates clearly articulate model limitations, performance characteristics, and ethical considerations, facilitating ongoing monitoring and improvement.
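To make the idea of standardised model documentation concrete, the sketch below represents a model card as a small data structure that renders to Markdown. The field names are illustrative and are not the exact template used by Google or any other organisation. A real card would typically cover more ground, such as training data, evaluation conditions, and contact details.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Minimal model documentation record; field names are illustrative."""
    name: str
    intended_use: str
    metrics: dict = field(default_factory=dict)
    limitations: list = field(default_factory=list)
    ethical_considerations: list = field(default_factory=list)

    def to_markdown(self) -> str:
        def bullets(items):
            # Make gaps explicit instead of silently omitting a section.
            return [f"- {item}" for item in items] or ["- None recorded"]

        lines = [f"# Model card: {self.name}", "", "## Intended use", self.intended_use, ""]
        lines += ["## Performance", *bullets(f"{k}: {v}" for k, v in self.metrics.items()), ""]
        lines += ["## Limitations", *bullets(self.limitations), ""]
        lines += ["## Ethical considerations", *bullets(self.ethical_considerations)]
        return "\n".join(lines)
```

Rendering "None recorded" for empty sections is a deliberate choice in this sketch: an undocumented limitation is itself something reviewers should be able to see.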

Operationalising Ethical AI Practices

Embedding Ethics into AI Development Lifecycles

For ethical AI to succeed, organisations must integrate ethical considerations throughout the entire development lifecycle. Effective tactics include:

- Ethics-by-Design Models: Incorporating ethical constraints and considerations into AI tools from the earliest design phases

- Algorithmic Pre-Launch Assessments: Conducting thorough reviews to identify potential unintended consequences before deployment

- Maturity Frameworks: Establishing clear milestones to track progress toward comprehensive ethical practices

A notable example comes from Amazon, which reportedly abandoned an experimental recruiting tool after discovering that it systematically downgraded applications from women, a pattern learned from historical hiring data dominated by male applicants. The case shows why fairness checks and representative training data need to be built into iterative development from the start rather than retrofitted once a problem surfaces.

Developing Employee Training and Ethical Awareness Programmes

Educating employees about AI ethics ensures that ethical priorities are consistently upheld across the organisation. Effective training programmes emphasise:

- Fundamental ethical principles such as fairness, transparency, and privacy-first approaches

- Real-world case studies analysing prior missteps in AI ethics as learning opportunities

- Practical tools and techniques for identifying and addressing ethical concerns in everyday work

IBM exemplifies best practices through its comprehensive staff training on ethical AI protocols, which combines theoretical knowledge with hands-on experience using ethics toolkits. Their approach demonstrates how organisations can build a culture of moral awareness that extends beyond technical teams to all employees engaging with AI systems.

Leveraging Tools and Technologies for Ethical Compliance

To operationalise ethical AI practices at scale, organisations increasingly rely on specialised tools and technologies:

- Bias Detection Frameworks: Tools like IBM's AI Fairness 360 and Google's What-If Tool enable proactive identification and mitigation of algorithmic bias

- Transparency Solutions: Documentation formats such as model cards, which platforms like Hugging Face support, facilitate documentation and sharing of model limitations and ethical considerations

- Automated Compliance Tools: Solutions that continuously monitor AI systems for drift or unexpected behaviours that might indicate emerging ethical issues

The Alan Turing Institute in the UK has developed several open-source tools to support ethical AI implementation, demonstrating how technical solutions can complement policy frameworks in ensuring responsible AI practices.
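Automated monitoring for drift often compares the distribution of a feature in recent data against a reference sample. One widely used measure is the Population Stability Index (PSI). The sketch below is a minimal standard-library version that assumes a single numeric feature and equal-width bins derived from the reference sample. The bin count and any alert threshold are placeholders to be set by the monitoring policy.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to the edge bins
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p_ref = proportions(expected)
    p_new = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_ref, p_new))


reference = list(range(100))
unchanged = population_stability_index(reference, list(range(100)))
shifted = population_stability_index(reference, [v + 50 for v in reference])
```

Identical distributions give a PSI of zero, and the value grows as the recent data moves away from the reference. A monitoring job could compute this per feature on a schedule and raise an alert when it crosses the agreed threshold.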

AI Ethics Policy and Regulatory Compliance

Aligning Policies with Major AI Regulations

The regulatory landscape for AI is evolving rapidly, with frameworks like the EU AI Act and the UK's emerging AI regulatory approach setting new standards for responsible development. Compliance requires integrating practices such as:

- Risk categorisation frameworks that assign each AI application to a risk tier, so that the strictest controls are targeted at high-risk applications

- Comprehensive privacy safeguards and user-centric transparency in system interfaces

- Documentation requirements that demonstrate due diligence in addressing ethical concerns

Telefónica provides an exemplary case study in regulatory alignment, working proactively with EU regulators to ensure its AI development efforts align with emerging legal frameworks. Their approach demonstrates how early engagement with regulatory stakeholders can facilitate more seamless compliance.
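A risk categorisation framework can be encoded as a small set of rules applied to every entry in an organisation's AI inventory. The sketch below loosely echoes the tiered, risk-based structure of the EU AI Act, but the rules, the `HIGH_RISK_DOMAINS` list, and the attribute names are hypothetical placeholders. It is not a legal classification, which would require review against the regulation itself.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass(frozen=True)
class AIUseCase:
    name: str
    domain: str
    interacts_with_public: bool = False
    uses_banned_practice: bool = False


# Hypothetical internal list; a real one would come from legal review.
HIGH_RISK_DOMAINS = {"recruitment", "credit_scoring", "medical_triage"}


def categorise(use_case: AIUseCase) -> RiskTier:
    """Assign the strictest tier whose rule matches the use case."""
    if use_case.uses_banned_practice:
        return RiskTier.PROHIBITED
    if use_case.domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if use_case.interacts_with_public:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL
```

Checking the rules from strictest to least strict means a use case that matches several rules always lands in the most demanding tier.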

Ensuring Cross-Border Interoperability of Ethics Policies

Global organisations managing AI projects across multiple jurisdictions face unique challenges in maintaining consistent ethical standards. Effective approaches include:

- Monitoring global regulatory trends in real time to anticipate and prepare for new compliance requirements

- Partnering with AI-focused legal experts to adapt governance frameworks to specific market contexts

- Developing modular ethics policies with core principles that remain consistent globally, while allowing for regional adaptations

Financial services provider ING demonstrates effective cross-border governance through its coordinated global AI ethics strategy, which ensures ethical compatibility across diverse regulatory environments while maintaining operational efficiency.
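The modular approach, with a consistent global core and regional adaptations, can be sketched as a base configuration plus controlled overrides. In the example below, the policy keys, the values, and the `LOCKED_KEYS` set are all illustrative assumptions. The point is only that core principles are protected from regional change while operational parameters can vary.

```python
import copy

CORE_POLICY = {
    "principles": ["transparency", "fairness", "accountability", "privacy"],
    "human_review_required": True,
    "data_retention_days": 365,
}

# Keys that regional teams may never override (hypothetical choice).
LOCKED_KEYS = {"principles", "human_review_required"}


def build_regional_policy(core, overrides):
    """Return a copy of the core policy with permitted regional overrides applied."""
    locked = LOCKED_KEYS & overrides.keys()
    if locked:
        raise ValueError(f"Regional overrides may not change: {sorted(locked)}")
    policy = copy.deepcopy(core)  # never mutate the shared core policy
    policy.update(overrides)
    return policy


# Example: a region with a shorter retention requirement.
regional_policy = build_regional_policy(CORE_POLICY, {"data_retention_days": 90})
```

Rejecting attempts to override locked keys, rather than silently ignoring them, surfaces conflicts between regional requirements and global principles so they can be resolved deliberately.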

Balancing Compliance with Innovation

Striking the right balance between regulatory adherence and continued innovation represents a key challenge for organisations deploying AI. Successful strategies include:

- Establishing dedicated research labs focused on ethical experimentation within regulatory boundaries

- Leveraging regulatory sandboxes provided by authorities like the UK's Financial Conduct Authority for controlled testing of innovative applications

- Adopting agile governance approaches that can adapt quickly to technological and regulatory developments

OpenAI's development of ChatGPT in collaboration with oversight bodies exemplifies responsible adherence to compliance measures while pushing the boundaries of what's possible with language models. Their approach demonstrates how ethical considerations can coexist with groundbreaking innovation.

Practical Steps for Implementing an AI Ethics Policy

Step 1: Assess Your Current AI Landscape

Begin by conducting a comprehensive audit of your organisation's existing AI systems, identifying:

- All active AI applications and their associated risk levels

- Current governance practices and potential gaps

- Stakeholders impacted by AI systems, both internally and externally

The UK Government's Data Ethics Framework provides a useful assessment methodology that organisations can adapt for their specific contexts.

Step 2: Develop Core Ethical Principles

Establish foundational principles that reflect your organisation's values and commitments:

- Engage diverse stakeholders in collaborative workshops to identify shared ethical priorities

- Research industry-specific ethical considerations that might apply to your context

- Draft clear, actionable principles that can guide practical decision-making

PwC's Responsible AI Framework offers a valuable template for developing ethical principles that balance aspiration with practicality.

Step 3: Create Governance Structures

Implement oversight mechanisms to ensure consistent application of ethical principles:

- Establish an AI ethics committee with cross-functional representation

- Define clear roles and responsibilities for ethical oversight

- Develop escalation pathways for addressing potential ethical concerns

The NHS AI Lab provides instructive examples of governance structures specifically designed for sensitive healthcare applications.

Step 4: Integrate Ethics into Development Processes

Embed ethical considerations throughout the AI lifecycle:

- Create ethical checkpoints at key development milestones

- Implement standardised documentation practices for model characteristics and limitations

- Develop pre-deployment assessment protocols for high-risk applications

Microsoft's Responsible AI Impact Assessment Template offers a practical framework for integrating ethics into development workflows.
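Ethical checkpoints are easier to enforce when they are expressed as a release gate that blocks deployment until the required evidence exists. The sketch below is one hypothetical way to do this. The check names, the two risk levels, and the `REQUIRED_CHECKS` mapping are assumptions for illustration and are not drawn from any specific template.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from risk level to the evidence required before release.
REQUIRED_CHECKS = {
    "low": {"model_card"},
    "high": {"model_card", "bias_assessment", "ethics_committee_signoff"},
}


@dataclass
class ReleaseCandidate:
    name: str
    risk_level: str
    completed_checks: set = field(default_factory=set)


def missing_checks(candidate: ReleaseCandidate) -> set:
    """Checks still outstanding for this candidate's risk level."""
    return REQUIRED_CHECKS[candidate.risk_level] - candidate.completed_checks


def can_deploy(candidate: ReleaseCandidate) -> bool:
    """A candidate may deploy only when no required check is outstanding."""
    return not missing_checks(candidate)
```

Wired into a deployment pipeline, `can_deploy()` would stop a high-risk release that lacks a bias assessment or committee sign-off, and `missing_checks()` tells the team exactly what remains.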

Step 5: Monitor, Evaluate and Iterate

Establish continuous improvement mechanisms:

- Implement regular audits of AI systems against ethical benchmarks

- Collect feedback from stakeholders affected by AI applications

- Review and update policies to reflect emerging best practices and regulatory changes

Frequently Asked Questions about AI Ethics and Governance

What is the difference between AI ethics and AI governance?

AI ethics refers to the moral principles and values that guide the development and use of artificial intelligence systems, focusing on questions of fairness, transparency, accountability, and harm prevention. It addresses fundamental questions about what AI systems should and should not do.

AI governance, meanwhile, encompasses the practical frameworks, policies, and processes organisations implement to ensure AI systems operate according to these ethical principles. Governance provides the operational structure through which ethical aspirations become a practical reality.

While ethics provides the "why" behind responsible AI practices, governance offers the "how": the mechanisms by which organisations can ensure their AI systems consistently align with ethical values and regulatory requirements.

How can organisations balance innovation with ethical constraints?

Balancing innovation with ethical considerations requires thoughtful approaches:

- Ethics-by-design: Integrate ethical considerations from the earliest stages of development rather than applying them retroactively

- Regulatory sandboxes: Utilise controlled environments for testing innovative applications while managing risks

- Stakeholder collaboration: Engage diverse perspectives to identify potential ethical concerns before they manifest in deployed systems

- Incremental deployment: Roll out AI systems gradually, allowing time to assess impacts before scaling

The UK's Centre for Data Ethics and Innovation (renamed the Responsible Technology Adoption Unit in 2024) offers valuable resources on balancing innovation with responsible AI practices.

What are the key regulations affecting AI ethics in the UK?

UK organisations must navigate several important regulatory frameworks:

- UK GDPR and Data Protection Act 2018: Govern the processing of personal data, including by AI systems

- UK's AI Regulatory Framework: Currently being developed to provide proportionate, pro-innovation governance of AI

- Sector-specific regulations: Additional requirements in fields like financial services (FCA guidelines) and healthcare (MHRA regulations)

- International standards: ISO/IEC standards on AI ethics and risk management that influence UK practices

The UK Government's AI Action Plan outlines the evolving regulatory approach to artificial intelligence.

How can small organisations implement AI ethics without extensive resources?

Small organisations can take practical steps toward ethical AI:

- Prioritise high-risk applications for more intensive ethical review

- Leverage open-source ethics tools and frameworks rather than building from scratch

- Join industry consortia to share knowledge and resources

- Adopt standardised documentation practices like Model Cards to communicate system limitations efficiently

- Focus on fundamental principles like transparency, fairness, and accountability

The Alan Turing Institute's Simplified Guide to AI Ethics provides accessible guidance for organisations with limited resources.

What metrics can organisations use to measure the effectiveness of their AI ethics programmes?

Meaningful metrics for AI ethics programmes include:

- Incident reduction: Tracking and reducing ethical incidents or near-misses over time

- Stakeholder trust: Measuring user confidence in AI systems through surveys and feedback mechanisms

- Audit results: Performance in internal and external ethical audits

- Training completion: Percentage of relevant staff who have completed ethics training

- Time-to-resolution: Speed at which identified ethical issues are addressed

- Diversity metrics: Representation of diverse perspectives in AI development teams and testing groups
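Several of these metrics reduce to simple calculations once the underlying records are kept consistently. As a hedged illustration, the sketch below computes training completion and median time-to-resolution. The record layouts (`in_scope`, `completed`, `opened`, `resolved`) are hypothetical and would need to match whatever HR and incident systems an organisation actually uses.

```python
from datetime import date
from statistics import median


def training_completion_rate(staff):
    """Fraction of in-scope staff who have completed ethics training."""
    relevant = [s for s in staff if s["in_scope"]]
    if not relevant:
        return 0.0
    return sum(1 for s in relevant if s["completed"]) / len(relevant)


def median_days_to_resolution(incidents):
    """Median days from opening to resolution, ignoring incidents still open."""
    durations = [
        (i["resolved"] - i["opened"]).days
        for i in incidents
        if i["resolved"] is not None
    ]
    return median(durations) if durations else None


incidents = [
    {"opened": date(2024, 1, 1), "resolved": date(2024, 1, 4)},
    {"opened": date(2024, 2, 1), "resolved": date(2024, 2, 8)},
    {"opened": date(2024, 3, 1), "resolved": None},  # still open
]
```

The median is used here rather than the mean so that a single long-running incident does not dominate the figure, though reporting both may be more informative.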

Conclusion: The Strategic Imperative of AI Ethics

AI ethics policies have evolved from optional considerations to essential foundations for responsible, practical artificial intelligence. By prioritising principles like transparency, fairness, and accountability, organisations can not only mitigate risks but also foster the trust necessary for widespread AI adoption and impact.

The integration of participatory design approaches, robust governance frameworks, and comprehensive compliance strategies ensures that ethical considerations become seamless components of AI operations rather than afterthoughts. With regulatory landscapes evolving rapidly in the UK and globally, forward-thinking organisations must proactively align with emerging standards while maintaining the flexibility to adapt to new requirements.

Ultimately, an effective AI ethics policy represents more than a safeguard against potential harms—it constitutes a strategic asset that enables sustainable innovation and competitive differentiation. By implementing the practical steps outlined in this guide, organisations can navigate the complex intersection of technology, ethics, and regulation to deliver AI solutions that are not only powerful but also responsible and trustworthy.

The responsibility for translating ethical principles into practice rests with organisations that develop and deploy AI systems. By moving beyond theoretical guidelines to implement concrete actions, these organisations can help ensure that artificial intelligence advances in ways that reflect our shared values and contribute positively to society.

Ready to strengthen your organisation's approach to AI ethics and governance? Begin by assessing your current practices against the frameworks outlined in this guide, identifying priority areas for enhancement, and developing a roadmap for implementing more robust ethical safeguards across your AI initiatives.